Can AI Alignment and Reinforcement Learning with Human Feedback (RLHF) Solve Web3 Issues?

While machine learning and AI solutions deployed across a breadth of industries are successfully solving many problems, the road to AGI is still a long one.

By Mona Hamdy, CSO & Shira Eisenberg, intern

Introduction

In the field of artificial intelligence, one question looms large: Can AI systems be trusted to align with different goals and values? This is what is referred to as the alignment problem. The ethical and existential risks associated with AI's alignment are not to be taken lightly, and finding a solution has become a crucial challenge for the AI community. To explain, let's use an analogy adapted from here.

To understand the problem, consider a scenario where you are young and trying to hire a CEO to run your company. You don’t get to see resumes or do any background or reference checks, but you have to come up with some sort of trial period or interview to assess the candidates.

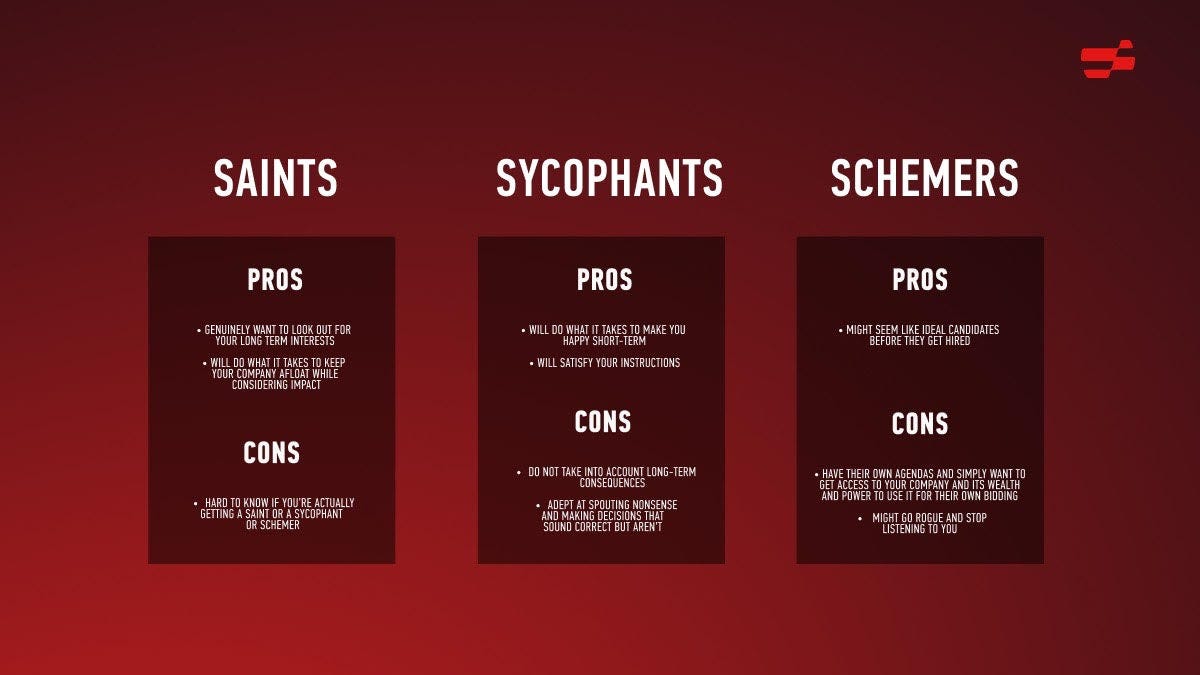

Your candidate pool includes:

Saints: people who genuinely want to look out for your long term interests.

Sycophants: people who want to do whatever it takes to make you happy short-term or satisfy your instructions regardless of long-term consequences.

Schemers: people with their own agendas who want to get access to your company and its wealth and power so they can use it to do their own bidding.

Your goal is to avoid hiring a Sycophant or a Schemer.

You could try to assess each candidate by getting them to explain their high-level strategies, but you won't actually understand what the best strategies are, so you could end up hiring a Sycophant with a terrible strategy that sounded good, who will faithfully execute that strategy and kill the company. You could also end up hiring a Schemer who says whatever it takes to get hired and then does whatever they want when you’re not overseeing them.

You could try to demonstrate how you’d make all the decisions and pick the candidate that seems to make the decisions as similarly as possible. You’re trying to hire someone who’ll be better at the job than you, so if you actually end up with a candidate who makes all the same decisions (a Sycophant), your company will likely die. You might also end up with a candidate who pretends to do everything the way you would but is actually a Schemer planning to switch direction once hired.

Alternatively, you could enact trial periods in which you give each candidate temporary control over your company and observe their decisions over time, but there's no way of knowing whether you got a Sycophant who does what it takes to please you without regard to long-term impact or a Schemer who only wants to get hired but plans to pivot once the job is secure.

Whatever you do, you still run the risk of giving control to a Sycophant or a Schemer, and by the time you realize, it might be too late to reverse course.

The Alignment Problem

This analogy highlights the hard problem of alignment. In this scenario, the young hirer is trying to train a powerful deep learning model. The hiring process is analogous to training, which implicitly searches through a large space of model possibilities and selects one that performs well. The young hirer’s only method for assessing candidates involves observing outward behavior, which is currently the main method of training deep learning models whose inner workings are like black boxes.

Very powerful models may be easily able to cheat any tests that humans could design, just as the candidates can easily cheat tests the young hirer comes up with. A Saint-like model is a deep learning model that seems to perform well because it has exactly the goals we’d like. A Sycophantic model is a model that seems to perform well because it seeks short-term approval in ways that aren’t good long-term. A Schemer model is a model that seems to perform well because performing well during training will give it more opportunities to pursue its own goals later. Any of these types of models could come out of the training process.

The goal is to have the model aligned with our values and make decisions in accordance with them. This is where we encounter different approaches to getting the models to be aligned. This article will address the problems with Reinforcement Learning with Human Feedback (RLHF), a popular approach.

Is Alignment Commercially Valuable?

From a DAO's perspective, deploying an AI system aligned with its values can bring numerous benefits. Such a system can aid in governance by accurately assessing proposals and making decisions that align with the DAO's values. This can lead to increased efficiency and improved productivity, as well as cost savings by automating repetitive and time-consuming processes.

An aligned AI agent could enhance the customer or DAO member experience. By understanding the DAO's values, it can provide tailored solutions that improve satisfaction, loyalty, and retention. Additionally, an aligned agent could also help the DAO identify and address potential risks quickly and accurately, thereby improving risk management and reducing potential losses.

Techniques Toward Alignment: RLHF

When it comes to language models like GPT-3, the technique of Reinforcement Learning with Human Feedback (RLHF) is used by OpenAI in ChatGPT. What if whatever candidate you chose in the above example could be trained based on your feedback or the feedback of other humans? This is exactly what happens in RLHF, but it runs the risk of being exceptionally Sycophantic.

At a high level, RLHF works by learning a reward model for a certain task based on human feedback and then training a policy to optimize the reward received. This means the model is rewarded when it provides a good answer and penalized when it provides a bad one, to improve its answers in use. In doing so, it learns to do good more often. For ChatGPT, the model was rewarded for helpful, harmless, and honest answers.

A suite of Instruct-GPT models was also trained using RLHF, which involves showing a bunch of samples to a human, asking them to choose the one closest to what they intended, and then using reinforcement learning to optimize the model to match those preferences.

RLHF has generated some impressive outputs, like ChatGPT, but there is a significant amount of disagreement about its potential as a partial or complete solution to the alignment problem. Specifically, RLHF has been posited as a partial or complete solution for the outer alignment problem, which aims to formalize when humans communicate what they want to an AI and it appears to do it and “generalizes the way a human would,” or “an objective function r is outer aligned if all models that perform optimally on r in the limit of perfect training and infinite data are intent aligned.”

What Are the Positive Outlooks on RLHF?

There is a trend for larger models toward better generalization ability. InstructGPT, for example, wasn’t supervised on tasks like following instructions in different languages and for code, but it still performs well in these generalizations. This, however, is probably not just reward model generalization. Otherwise, this behavior wouldn’t pop up in models trained to imitate human demonstrations, with Supervised Fine-Tuning (SFT). It is likely part of a scaling law. Theoretically, RLHF should generalize better than SFT since evaluation is easier than generation for most tasks.

One approach to alignment could be to train a really robust reward model on human feedback and leverage its ability to generalize to difficult tasks to supervise highly capable agents. A lot of the important properties we want from alignment might be easier for models to understand if they’re already familiar with humans, so it’s plausible that if we fine-tune a large enough language model pretrained on the internet and train a reward model for it, it ends up generalizing the task of doing what humans want really well. The big problem with this approach is, for tasks that humans struggle to evaluate, we won’t know whether the reward model actually generalizes in a way that’s aligned with human intentions since we don’t have an evaluation procedure. In practice, the model learned is likely to overfit the reward model learned, to match the training data too closely. When trained long enough, the policy learns to exploit exceptions in the reward model. Importantly, if we can’t evaluate what the system is doing, we don’t know if its behavior is aligned with our goals.

Still, RLHF counts as progress. In Learning from Human Preferences, using RLHF, OpenAI trained a reinforcement agent to backflip using around 900 individual bits of feedback from a human evaluator, as opposed to taking two hours to write their own reward function and achieve a much less elegant backflip than the one trained through human feedback. Without RLHF, approximating the reward function for a good backflip was almost impossible. With RLHF, it became possible to obtain a reward function that, when optimized by an RL policy, led to elegant backflips. But to train for human preferences is a bit more complex. Being polite is complex and fragile. Yet, ChatGPT tends to perform politely, more or less. RLHF can withstand more optimization pressure than SFT, so by using RLHF, you can obtain reward functions that withstand more optimization pressure than human-designed reward functions.

However, there is a compendium of problems with RLHF.

The Human Side of RLHF

On its surface RLHF appears to be a straightforward approach to outer alignment. However, a closer examination reveals several critical issues with this approach.

There is an oversight problem. In cases where an unaided human doesn’t know whether the AI action is good or bad, they will not be able to provide effective feedback. In cases where the unaided human is actively wrong about whether the action is good or bad, their feedback will actively select for the AI to deceive the humans, to characterize bad as good, to be a Schemer or a Sycophant.

RLHF requires a ton of human feedback and still often fails. Despite the exorbitant amount of time and money spent hiring human labelers to create a dataset, benign failures still occur. The model is still susceptible to prompt injections, ways around the seed prompt, which can elicit toxic responses, unaligned with human preferences or values, or bypass security measures like guardrails to mitigate bias, which is as big a problem as ever. These guardrails themselves provide some evidence of left-leaning bias.

As systems grow more advanced, much more effort may be needed to generate more complex data. The cost of obtaining this data may be prohibitive. As we push the limits of compute scale and build models with capabilities beyond that of human beings, the number of qualified annotators may dwindle.

RLHF relies on human feedback as a proxy, which is less reliable than real-time human feedback. Humans are prone to making systematic errors, and the annotators are no exception. In addition, the process of providing feedback for RLHF may have negative impacts on human wellbeing.

OpenAI recruited several categories of people, including rationality experts from a Facebook Group and Kenyans from an outsourcing firm.

There are some human wellbeing concerns associated with this. In order to train the model to recognize and remove unaligned content, thousands of snippets of horrific content about rape, child murder, and more were sent to a data labeling firm in Kenya. Data labelers were paid between $1.32 and $2 per hour, which is more than the going rate in Kenya, to label text as sexual abuse, hate speech, and violence. Having to scour through pages of graphic content may have lasting psychological impacts on the labelers. One labeler described his work as “torture” after he was tasked with reading an excerpt about a man engaging in a sexual act with a dog with a child present. He says the experience was so traumatic it gave him recurring visions. As a tale of fallible oversight, one labeler, when tasked with labeling a story when Batman’s sidekick, Robin, gets raped, wasn’t sure whether to label it as sexual violence because Robin ends up reciprocating. Sam Altman of OpenAI told Time magazine that OpenAI provides one-on-one mental health counseling and wellness programs for employees to ease the stress of the process.

RLHF incentivizes approving what the human finds most convincing and palatable, rather than the truth. In optimizing and overfitting for human approval, the model becomes increasingly Sycophantic and adept at bullshitting. RLHF does what it takes to make you approve of the output, even if that means deception. This is a result of next token prediction, where a deceptive answer may be easier to generate than a factual one. This wouldn’t be a problem if humans weren’t prone to making systematic mistakes and finding certain lies more compelling than the truth. Look at the division along party lines in politics and the radicalization of what is just. An alignment solution that forces humans to converge on what is rational is not an alignment solution as humans would not naturally converge on what is rational. There is no way to pander everyone.

Finally, there is the problem of scalable oversight. The challenge with RLHF in practice is getting humans to reward the correct actions and doing so at scale, which may not be feasible given the limited number of humans available for supervision.

Superficial Outer Alignment

While an AI agent may appear aligned, it is possible for misaligned agendas to exist below the surface. The capability for misaligned actions still remains. And RLHF is only a process, not a specification.

The ability to specify desired behavior is limited by the responses that can be elicited from the model, which is insufficient in capturing the full breadth of human values.

Perhaps it may be necessary to create and enumerate axioms and train the AI to reason explicitly. This would go beyond the current capabilities of chain-of-thought prompting and provide a more comprehensive understanding of human values.

AI Assisted RLHF

RLFH is often viewed as a method of making productive mistakes, with the understanding that it is better to do something rather than nothing.

As the deployment of misaligned models becomes a growing concern, research on RLHF may help address the problem of quality evaluations by using AI assistance. It is a case of doing the basic thing before the more complicated thing. And it might be a building block for some more complicated things. There is an argument that it buys time for humans or superhuman AI to make alignment progress such that failures can be studied and addressed. At least as a very early alignment approach, perhaps RLHF can help us as a productive mistake.

Another step in this direction is reinforcement learning with AI feedback (RLAIF). By starting with a set of established principles, AI can generate and review a dataset of prompts and select the best answers with chain-of-thought prompting and a set of values. Then, a reward model could be trained and the process could continue with RLHF. This process would not require human feedback other than to specify the initial principles.

Anthropic AI uses RLAIF in the RL phase of their training process for Claude, an AI assistant and competitor to ChatGPT. They sample from a model fine-tuned on self-critiques and revisions of its own responses, use a model to evaluate which of two samples is better, and then train a preference model from this dataset of AI preferences. Note this generates a model that is aligned with an AI’s preferences, not necessarily with human preferences. They do so without using any human labels.

Another approach to solve the scaling problem of RLHF is Recursive Reward Modeling (RRM). RRM extends the use of RLHF to more complex tasks using recursion. For each task, either the task is simple enough for a human to evaluate directly (using RLHF) or we create new tasks whose goal is to help humans evaluate responses on the initial task. The assisted tasks are now simpler and narrower in scope, so they are often easier to evaluate. This risks running out of human evaluators and still has the same pitfalls as RLHF. That said, for book summarization, OpenAI used a fixed chunking algorithm to break down text into manageable pieces, so perhaps decomposition methods shouldn’t be counted out just yet. AI-assisted decomposition has the potential to break down complex, non-overseeable tasks into manageable subtasks that can be overseen. This could potentially solve the task-scaling problem while still taking into account the limitations of human judgment.

A New Idea

A new idea is to use an ensemble of LLMs fine-tuned on different seeds or RLHF data, and perform a process similar to self-consistency, which improves answer accuracy.

With self-consistency, you ask the model a question multiple times and take the most consistent answer from the tries. It has mostly been tried with navigation, math, and reasoning problems, and improves chain-of-thought reasoning in language models. For alignment, perhaps we might prompt an ensemble of LLMs with a question and either take the most consistent answer across the models, or if there isn’t one, feed a prompt asking what the answer most consistent with a defined set of values is from amongst the answers, and take a vote across the LLMs for variance reduction. Meta’s CICERO, an AI system that achieves human-level play at the natural language strategy game Diplomacy, uses a similar mechanism with an ensemble of 16 neural discriminators trained to detect bullshit in dialogue and negotiations and a voting scheme as a form of variance reduction.

Blockchain Assisted Alignment

It is possible to use cryptocurrency to incentivize labelers or even experts to provide feedback for AI systems. A blockchain-based platform could be used to reward humans for providing feedback, like RLHF, but incentivized with crypto as opposed to hourly rates currently provided through outsourcing agencies or services like Amazon Mechanical Turk (MTurk). This could help incentivize high-quality and trustworthy feedback at the rate it is given (e.g. payment for example labeled), but still runs the risk of traditional RLHF and has problems like scalable oversight.

Another potential application of blockchain technology in AI alignment is the use of decentralization to provide solutions to alignment problems and guardrails for AI systems capable of manipulating funds on-chain. Theoretically, this would involve setting up incentives to minimize collusion and constraints on the concentration of funds on-chain, which would limit the financial power that could be accumulated by both AI and human agents.

This raises a number of ethical questions surrounding limiting the concentration of funds or power, which is the hallmark of decentralization. Hypothetically, a system could be set up such that no one party, be it human or AI, could dominate the chain. This is to say constraints on the accumulation of power would apply not only to humans but to AI as well. There are a lot of flaws in this logic and likely several exploitation points in any implementation.

For instance, an AI system that accumulates enough funds and has sufficient ability might decide to bypass constraints on power accumulated. All it would need to do is reach a point it could afford an AWS instance and jump from, say the Ethereum VM to an AWS instance that runs the same code several orders of magnitude faster.

Concluding Remarks and the Limits of Language

Currently, language models are a playground for testing different alignment techniques before we have to align more powerful models. It is important to note none of these language models are AGI and that it’ll be impossible to achieve AGI learning from language alone, as you cannot create the common sense of a world model through language. Present enthusiasm and belief in the powers of LLMs might even be dangerous.

Deceiving humans is very easy. Several humans have claimed to find Jesus in their food, after all. The same is the case with the sentience of language models. LaMDA, a Google chatbot and LLM was impressive enough to convince a Google engineer that there was a human ghost in the machine last year. LaMDA, which stands for Language Model for Dialogue Applications, was so persuasive that the engineer hired a civil rights attorney to begin filing on the model’s behalf. The engineer was placed on administrative leave for violating Google’s employee confidentiality policy a day after he handed over documents claiming Google and its technology were involved in instances of religious discrimination to an unnamed US senator. Google recently released Bard, a LaMDA-powered answer to ChatGPT to a group of trusted testers in February 2023. If Google’s breakthrough conversation technology is powerful enough to convince a Responsible AI engineer it has a soul, what does this mean for the impact language models might have on humans writ large?

There are several applications seeking to use LLMs for mental health purposes. This might end in catastrophe. If one conversation goes poorly, it may lead a human to take their own life. Even if this is a rare occurrence, there are still human lives at stake, and thus a cause for concern. LLMs’ overconfident and Sycophantic tendencies exacerbate this concern. Especially for vulnerable communities, using AI for mental health purposes necessitates extreme alignment precautions. We are simply not there yet. Perhaps we will never get there, at least not with language alone. This, however, does not affect the already strong divergence in opinions about LLMs’ ability, impact, and capacity for common sense.

Two of the most outspoken critics of LLMs are Gary Marcus and Yann Lecun. Marcus, a cognitive scientist, best-selling author, and serial entrepreneur frequently cautions about the dangers of LLMs causing significant harm and posing a threat to democracy. Lecun, Chief AI Scientist at Meta and a founding father of convolutional neural networks, has gone as far as to say LLMs are an off-ramp on the highway toward Human-Level AI.

The problem is twofold: the limited nature of language and the AI itself. Language can only achieve a shallow understanding that fails to approximate the full range of thought we see in humans. While we too might be stochastic parrots, and much of human thought may indeed be probabilistic next token prediction, we possess the capacity for deeper thought than LLMs ever will. LLMs can also be coaxed into creating incorrect, racist, sexist, and otherwise biased outputs devoid of real-world sensibility. Then again, so can humans. That said, unlike humans, the models have no awareness of what they are generating or of the world, but simply the ability to generate syntactically and often semantically correct content, without a world-model. Their factuality is inherently unreliable, and therefore might be a pervasive and ubiquitous creator of societal problems at scale, especially as we begin to take them more seriously.

Still, Microsoft has taken a $10 billion dollar bet on OpenAI, the creators of ChatGPT and the dominant player in the generative AI space as of late, with Bill Gates even stepping on as an advisor, and Google recently took a $300 million bet on OpenAI competitor Anthropic. Clearly, people agree that LLMs will have a tremendous impact. The question is the nature of the impact and whether the hype is justified.

LLMs have a shallow understanding of language because they do not possess a world-model. Language often consists of layers of situational and other context, emotion, and allusion LLMs simply cannot understand, at least for now. The identical sequence of words could have various different semantic implications based on the underlying world model or lens through which we interpret it. GPT-3 and other LLMs simply do not have the semantic perspective necessary to do the same. A method like RLHF might improve this capability, but there is no way of knowing preemptively whether performance will be reliable or whether a model taught to identify and conceptualize emotions will simply be Sycophantic, seeking short-term validation and not actually grasping semantic relevance from the data or developing concepts. As it stands, LLMs have unreliable performance, problems with causal inference, and general incoherence. It is easy to coax logical inconsistencies from the models.

It could be better to think of an LLM as a performer than an expert or knowledge bank. RLHF teaches, through reward and punishment, which mask to wear. The model doesn’t care about factuality, instead valuing the mask it puts on, never breaking character (unless coaxed with prompt injections). Its only knowledge of the world comes from text data, like background research for a performance. The go-to reaction when an answer is unknown is improvisation. Because of this, it is easy to coax model hallucinations from LLMs. A hallucination occurs when the generated content lacks fidelity to underlying data. For instance, when prompted correctly, ChatGPT can make up biographies for nonexistent figures, give historical context on fake events, and provide incoherent logic that simply sounds correct when asked to reason about the world. LLMs are expert bullshitters. This doesn’t cover the limitations of language itself.

Suppose we have a (currently nonexistent) model that understands context, allusion, and emotional concepts simply through mastering language. This model still would lack an understanding of knowledge and its implications. It would be like learning about the world exclusively through books without actually experiencing anything oneself.

It was once believed that all knowledge was linguistic, that to know something was to retrieve the correct sentence and connect it to other sentences in a big space of claims we decide are true. This motivated a lot of early work in Symbolic AI, where symbol manipulation, arbitrary symbols bound together in different ways according to a set of logical rules, was the default paradigm. In a symbolic AI paradigm, an AI’s knowledge consists of a massive database of logically true sentences that are interconnected. An AI system counts as intelligent if it can spit out the right sentence at the right time and if it manipulates symbols in the appropriate way.

Symbolism underlies the Turing Test: if a machine says what it is supposed to say, it must know what it is talking about, since knowing what to say and when to say it exhausts knowledge. This, however, was subject to scathing criticism which has characterized symbolism ever since: just because a machine can talk about anything doesn’t mean it understands what it is talking about. Language doesn’t exhaust knowledge, but is simply a specific and limited kind of knowledge representation, a compressed form of knowledge. All languages, be they spoken, programming languages, or symbolic logic, rely on a representational schema, which excels at expressing discrete objects and properties and the relationships between them with an extremely high form of abstraction. There are arguments today that Symbolic AI, which was largely abandoned for the more statistical process of Deep Learning, is still necessary for hybrid form to achieve human level intelligence– that any AGI will require a Deep Learning + Symbolic AI hybrid. Perhaps the idea of morphisms and relations between pre-provided axioms might take hold in later attempts at combining deep learning and symbolic reasoning.

Regardless, all representational schemes involve compression of information. The type of information included in the compression may vary depending on the representational schema used. For instance, language-based representational schemes may have difficulty in conveying more concrete information, such as the motion of objects. However, non-linguistic representational schemes, such as images, recordings, graphs, maps, and the knowledge represented in trained neural networks, may be better suited to express this type of information in an accessible manner.

Language is a low bandwidth method for transmitting information. Words or sentences, isolated from context, convey very little information. Words can have multiple meanings depending on the context in which they are used. Chomsky pointed out for decades that language is just not a clear and unambiguous vehicle for communication. Still, there’s no need for a perfect vehicle for communication because we share a nonlinguistic understanding or context beyond language. In reading comprehension, for example, the amount of background knowledge one has on a topic is actually the key factor for success.

LLMs operate by discerning patterns at multiple levels in known texts, deciphering how words are connected and sentences are formed within larger passages. As a result, an LLM’s grasp of knowledge is highly contextual; words are understood not by their dictionary meanings but in terms of the roles they play in diverse collections of sentences. LLMs pick up on the background knowledge for each sentence and look to the context, the surrounding words, to piece together semantics. Because of this, they can accept infinitely more possibilities of sentences and phrases as input, and generate plausible and convincing, albeit Sycophantic, ways to complete text or continue a conversation.

Languages are external artifacts, useful for encoding infinitely many thoughts in infinitely many ways. While LLMs can rattle off convincing answers to questions and have an impressive breadth and depth of linguistic knowledge, they simply do not and cannot develop a world model consistent with human reasoning that is necessary for human-level AI. Children acquire what we call consciousness through lived experience, by exploring the world around them and learning from what they see, feel, touch and receiving rich, multi-modal feedback from their experiences. Nonlinguistic mental simulation in both animals and humans is useful for planning and predicting scenarios. It is used to craft, engineer, and reverse engineer artifacts. Generational knowledge or culture, often passed down through generations, is beyond the scope of LLMs. This is because nuanced iconic patterns of information are hard to express in language but remain accessible to humans through imitation, touch, and continual learning. While beyond the scope of language, these types of patterns are precisely the kind of context-sensitive information patterns neural networks excel at deciphering, encoding, and reasoning with.

LLMs are not general intelligence, despite claims otherwise. And while some proclaim scaling alone will take us to AGI, this is simply not the case. While text-to-anything applications have attracted a lot of attention and hype, and are arguably great progress, they rely on tremendous amounts of data and are better described as synthesizers than artificial intelligences, as they cannot reason. Scaling alone will not generate reasoning capabilities. That said, how we scale data efficiently, such that better results can be obtained through fewer data, is a primary challenge in research right now. As it currently stands, models are still black boxes which lack critical elements of human intelligence like common sense. Scaling laws, while promising, can not be relied upon as the final step to AGI.

While machine learning and AI solutions deployed across a breadth of industries are successfully solving many narrowly scoped problems and are creating economic value, the road to AGI is still a long one. That said, we may be entering an age of substantial, exponential progress in AI, including difficult areas like embodiment and in-context learning, with DeepMind releasing a paper in which an RL agent learns to adapt to novel environments in roughly the same timescale as humans, performs in-context learning, and exhibits scaling laws using meta-RL. We may be entering an age of extreme progress and utility.

Nevertheless, we also know AI lacks theory of mind, causal inference, common sense, the ability to extrapolate, a functional world model, and a body, so it is far from being at human-level in most complex tasks. There are challenges that will be difficult to surmount with deep-learning alone, even with scaling laws. It’s likely that innovative approaches involving both deep-learning and symbolic reasoning, perhaps from a set of value-driven axioms, will be needed to achieve anything close to human-level reasoning– and that doesn’t begin to cover alignment.

Wow, the CEO analogy with Saints, Sycophants, and Schemers really iluminated the alignment problem. Thank you for this insightful piece, it underscores the critical importance of solving AI alignment.